AI Platform Choices

The old world is dying, and the new world struggles to be born: now is the time of monsters.

Background

Unless you are living under a rock and not paying any attention to the news (and who could blame you), you may be thinking that AI is the next industrial revolution. This 'inevitable' change will reshape our world, cure diseases, and unlock unprecedented productivity. Economists are asking if this is an AI bubble. AI experts are warning us that AI presents a risk of human extinction at the same level as nuclear war or climate change. Whether you consider the costs of AI, the amount of AI slop, or AI sabotage, any decision by a business or government to procure and use AI systems requires close examination. I wrote this post to work through whether and how to proceed with AI, without being sucked into the AI hype vortex.

Companies whose products incorporate AI have spent ‘bet the business’ amounts of money building out their data centre infrastructure and training models. I asked Copilot about this, and it generated this table.

| Year | Company | Total AI Investment (Est.) | Data Center Investment | Model Development Highlights |
|---|---|---|---|---|
| 2021 | Google | ~$30B | ~$20B | Early Gemini model R&D |
| 2021 | Amazon | ~$25B | ~$18B | AWS AI services expansion |
| 2021 | Microsoft | ~$28B | ~$19B | Azure AI, OpenAI partnership |
| 2022 | Google | ~$35B | ~$22B | Gemini 1 development |
| 2022 | Amazon | ~$30B | ~$20B | Bedrock platform launch |
| 2022 | Microsoft | ~$32B | ~$21B | GPT-4 integration in Azure |
| 2023 | Google | ~$45B | ~$30B | Gemini 1.5, TPU v5 launch |
| 2023 | Amazon | ~$40B | ~$28B | Titan models, Trainium chips |
| 2023 | Microsoft | ~$50B | ~$35B | Copilot rollout, Stargate planning |
| 2024 | Google | ~$52B | ~$35B | Gemini 1.5 Pro scaling |
| 2024 | Amazon | ~$83B | ~$60B | AWS generative AI expansion |
| 2024 | Microsoft | ~$65B | ~$45B | GPT-4 Turbo, Azure AI Studio |
| 2025 | Google | ~$75B | ~$50B | Gemini 2, TPU v6 deployment |
| 2025 | Amazon | >$100B | ~$70B | AWS AI superclusters |
| 2025 | Microsoft | ~$80B | ~$55B | Stargate supercomputer, Copilot+ |
| Total | | ~$870B+ | ~$613B+ | — |

Copilot Notes for this table

These figures are estimates based on public reports and capital expenditure disclosures.

Data centre investments include infrastructure, GPUs (mostly Nvidia), and cloud scaling.

Model development includes R&D, training costs, and partnerships (e.g., OpenAI for Microsoft).
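A generated table deserves the same scrutiny as any other source. As a minimal sketch, the Python below adds up the two investment columns so they can be compared with the Total row. The figures are copied from the rows of the table; counting the ">$100B" entry as 100 is an assumption, so the first sum is only a lower bound.

```python
# (total, data_centre) estimates in $B, copied row by row from the table.
# Assumption: the ">$100B" entry is counted as 100, so the first sum is a lower bound.
ROWS = {
    2021: [(30, 20), (25, 18), (28, 19)],
    2022: [(35, 22), (30, 20), (32, 21)],
    2023: [(45, 30), (40, 28), (50, 35)],
    2024: [(52, 35), (83, 60), (65, 45)],
    2025: [(75, 50), (100, 70), (80, 55)],
}


def column_sums(rows):
    """Return (sum of total investment, sum of data centre investment) in $B."""
    total = sum(t for year in rows.values() for t, _ in year)
    data_centre = sum(d for year in rows.values() for _, d in year)
    return total, data_centre


total, data_centre = column_sums(ROWS)
print(f"Sum of rows:       ~${total}B total, ~${data_centre}B data centre")
print("Table's Total row: ~$870B+ total, ~$613B+ data centre")
```

On these inputs the rows come to roughly $770B and $528B. The data centre column in particular falls well short of the ~$613B+ in the Total row, so the totals should be treated with caution.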

These companies are naturally looking for a return on that investment. That could mean introducing new solutions that generate additional revenue streams, or adding "AI" capabilities to existing solutions and raising the price. But earlier this year, The Register reported that ChatGPT Pro is “struggling to turn a profit”. Microsoft is charging $30 per user per month for Copilot, which should help offset the nearly $12 billion that Microsoft has invested in OpenAI, the maker of ChatGPT, as well as its internal investments in AI. Either way, these costs are being passed on to the customer.

Evaluating AI

Marketing of AI presupposes the inevitability of AI. To question this narrative is to risk being mislabelled a Luddite.[1] But if the current generation of AI ‘solutions’ is a technology bubble inflated by billions in investments on expectations of astronomical returns, what is a prudent approach for moving forward on AI initiatives? It probably involves a combination of the following elements.

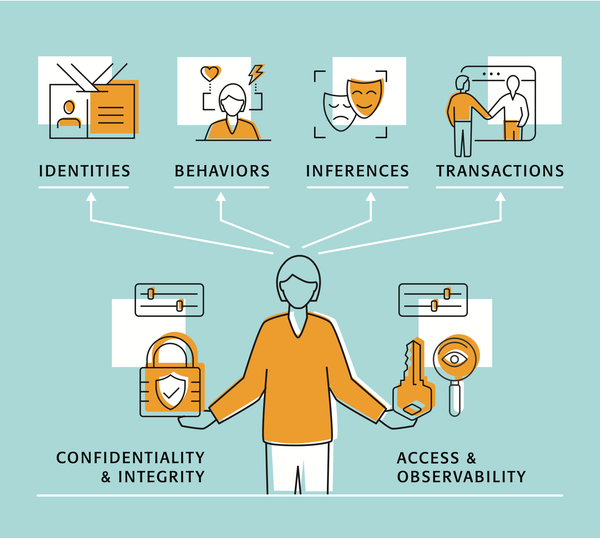

Data Due Diligence

This involves assessing the quality, integrity, and compliance of data used in AI systems, ensuring it meets regulatory standards and is suitable for analysis. This process identifies risks and opportunities related to data management and AI implementation before piloting an AI project. Areas to assess include:

- Data Compliance: Assess adherence to regulations like PIPEDA, CCPA, or GDPR to protect sensitive information.

- Data Quality: Evaluate the accuracy, completeness, and reliability of data used in AI models.

- Data Security: Review cybersecurity measures to safeguard data against breaches and unauthorised access.

- Data Sourcing: Investigate how data is collected, ensuring it is ethical and sustainable for AI applications.

- Intellectual Property: Examine ownership and licensing of data and AI technologies to avoid legal complications.
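Parts of the data quality assessment can be automated. The following is a minimal sketch, assuming records are available as a list of Python dictionaries. The function name `data_quality_report`, the field names, and the sample records are all hypothetical and exist only for illustration. It measures completeness per required field and flags duplicate keys.

```python
from collections import Counter


def data_quality_report(records, required_fields, key_field):
    """Summarise completeness and duplicate keys for a list of dict records."""
    total = len(records)
    missing = Counter()
    for record in records:
        for field in required_fields:
            value = record.get(field)
            # Treat None and blank strings as missing values.
            if value is None or str(value).strip() == "":
                missing[field] += 1
    key_counts = Counter(r.get(key_field) for r in records)
    duplicates = sorted(k for k, n in key_counts.items() if k is not None and n > 1)
    completeness = {
        f: (1 - missing[f] / total) if total else 0.0 for f in required_fields
    }
    return {"rows": total, "completeness": completeness, "duplicate_keys": duplicates}


# Hypothetical sample data: one blank email, one missing country, one repeated ID.
sample = [
    {"customer_id": "C1", "email": "a@example.com", "country": "CA"},
    {"customer_id": "C2", "email": "", "country": "CA"},
    {"customer_id": "C2", "email": "b@example.com", "country": None},
    {"customer_id": "C3", "email": "c@example.com", "country": "FR"},
]
report = data_quality_report(sample, ["email", "country"], "customer_id")
print(report)
```

A report like this covers only the mechanical part of data quality. Compliance, sourcing, and intellectual property still need human review.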

Pilot Project

Before adopting enterprise-wide AI solutions, you should conduct one or more pilot projects. Consider incorporating these elements in your pilots:

- Setting Clear Objectives: Define specific goals for the pilot project, such as improving efficiency, reducing costs, or enhancing customer experience.

- Stakeholder Engagement: Involve key stakeholders to ensure that the proposed project addresses identified objectives. Where personal data is involved in the model, consider including customer representatives and regulators as stakeholders.

- Data Preparation: Do the pre-work necessary to prepare the data. This includes data selection, cleaning, and transformation. Alternatively, ensure that your vendor can demonstrate that this has been done.

- Model Selection: Choose appropriate AI models and algorithms based on the objectives and data characteristics. Consider factors like complexity, interpretability, and scalability.

- Infrastructure Setup: Establish the necessary technical infrastructure, including hardware, software, and cloud services, to support the selected AI model.

- Test and Validate: Test to validate the model's performance against predefined metrics. This includes cross-validation and performance benchmarking. Treat this step as a go/no-go gate: you may have to abandon the project, and that is a win if it saves the cost and consequences of an AI solution that doesn’t address what it was supposed to address.

- Monitor and Evaluate: Set up mechanisms to continuously monitor the model's performance and impact during the pilot phase. Evaluate results against the initial objectives.

- Establish a Feedback Loop: Create a process for gathering feedback from users and stakeholders to refine the model and its implementation.

- Risk Management: Conduct an AI Impact Assessment to identify potential risks associated with the AI implementation and develop mitigation strategies.

- Document Everything: Maintain thorough documentation of the project, including methodologies, findings, and lessons learned for future reference.
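The "Test and Validate" step works best when the pass criteria are written down before the pilot starts. Here is a minimal sketch of such a check. The function `pilot_gate`, the metric names, and the threshold values are hypothetical placeholders, not recommended targets.

```python
def pilot_gate(results, thresholds):
    """Compare measured pilot metrics with thresholds agreed before the pilot.

    thresholds maps metric name -> (direction, limit), where direction is
    "min" (result must be >= limit) or "max" (result must be <= limit).
    Returns ("go" or "no-go", list of reasons for any failure).
    """
    failures = []
    for metric, (direction, limit) in thresholds.items():
        value = results.get(metric)
        if value is None:
            failures.append(f"{metric}: not measured")
        elif direction == "min" and value < limit:
            failures.append(f"{metric}: {value} is below the minimum {limit}")
        elif direction == "max" and value > limit:
            failures.append(f"{metric}: {value} is above the maximum {limit}")
    return ("go" if not failures else "no-go"), failures


# Hypothetical thresholds and results for illustration only.
thresholds = {
    "accuracy": ("min", 0.90),
    "cost_per_request_usd": ("max", 0.05),
    "user_satisfaction": ("min", 4.0),
}
results = {"accuracy": 0.93, "cost_per_request_usd": 0.08, "user_satisfaction": 4.2}

decision, reasons = pilot_gate(results, thresholds)
print(decision, reasons)
```

In this example the pilot meets its accuracy and satisfaction targets but exceeds its cost ceiling, so the gate returns "no-go" and the reason is recorded for the project documentation.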

Governance

Before the pilot project is complete, establish a governance framework to ensure a successful transition from pilot to full-scale operation. This framework helps manage risks, maintain compliance, and ensure that the AI system aligns with organisational goals. Key governance elements include:

| Governance Element | Description |
|---|---|
| Governance Structure | Establish a clear governance structure that defines roles and responsibilities for AI oversight, including a dedicated AI governance team. |
| Policies and Standards | Develop comprehensive policies and standards for AI usage, including ethical guidelines, data privacy, and security protocols. |
| Compliance Framework | Ensure compliance with relevant regulations and industry standards, such as PIPEDA or the GDPR, to protect data and user rights. |
| Risk Management | Implement a risk management framework to identify, assess, and mitigate risks associated with AI deployment and operation. |
| Performance Monitoring | Set up continuous monitoring of AI system performance against key performance indicators (KPIs) to ensure it meets business objectives. |
| Change Management | Develop a change management process to handle updates, modifications, and improvements to the AI system as needed. |
| Stakeholder Engagement | Maintain ongoing communication with stakeholders to gather feedback, address concerns, and ensure alignment with business goals. |
| Training and Education | Provide training and resources to help employees understand AI systems, their implications, and how to work effectively with them. |
| Audit and Review | Conduct regular audits and reviews of the AI system to assess compliance, performance, and alignment with governance policies. |
| Ethical Oversight | Establish an ethical oversight committee to assess the ethical implications of AI decisions and ensure the responsible use of AI. |

Other AI Risks

Supply Chain

Even if you decide not to proceed with AI, AI is likely in your supply chain. This may expose you to risks that need to be identified and addressed, preferably in your procurement process. Third-party vendors of AI technologies will be subject to the risks mentioned above. That means you have to plan for them failing to deliver, going out of business, or changing their pricing suddenly and dramatically. You should obtain attestations of data quality and confirm the measures taken to prevent data bias and errors. Suppliers may not adhere to the regulations that apply to your organisation, leading to compliance issues. As with any procurement, you must take steps to ensure your vendor contracts make them responsible for the things you are accountable for.

Shadow AI

Although last in this list, Shadow AI may well be the first place where AI-related risks appear in your enterprise. Shadow IT refers to the use of unauthorised applications, devices, or services within an organisation without the knowledge or approval of the IT department; Shadow AI is the same pattern applied to AI tools. While it can foster innovation and flexibility, it can also introduce any of the risks identified above without any governance or oversight.

However you decide to proceed, or not, with AI in your organisation, the following will probably be as accurate for whatever AI platform you choose as for any new technology:

- It will cost more and take longer than you expect.

- You will find that your vendors have overpromised.

- If you are lucky, you will find unexpected benefits. Otherwise, you are guaranteed to find unexpected consequences.

[1] The original Luddites were skilled technologists fighting the degradation of their work and the depredations of the mill owners, not dissimilar to what is happening with AI (Brian Merchant, Blood in the Machine).